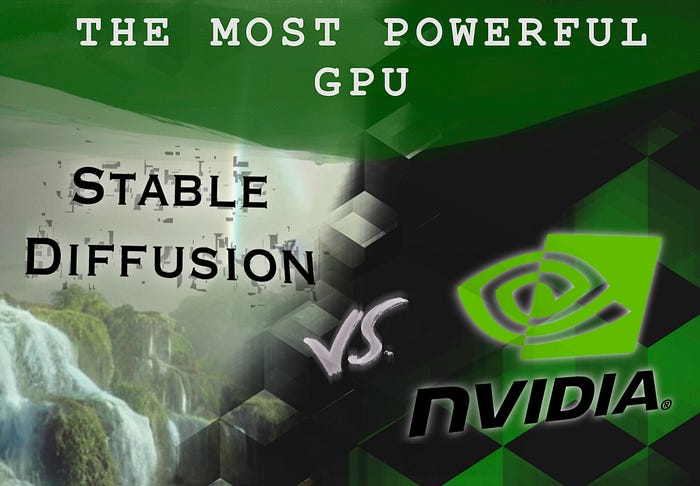

Stable Diffusion vs. the most powerful GPU: the NVIDIA A100

How fast do you think Stable Diffusion will run on a 20,000-dollar GPU? During my final test, it reached 100% GPU utilization and 72125MiB / 81920MiB of VRAM usage.

Earlier this week, I published a short on my YouTube channel explaining how to run Stable Diffusion locally on an Apple silicon laptop or workstation, allowing anyone with one of those machines to generate as many images as they want for absolutely FREE. It’s really quite amazing.

For those of you who haven’t heard of Stable Diffusion, it’s an open-source, free-to-use text-to-image AI model that took the internet by storm on the 22nd of August 2022.

In other words, you write a description, and Stable Diffusion draws it.

Take “an astronaut riding a horse” or “a corgi surfing an asteroid” as examples.

Since then, people have generated millions of pictures based on the craziest things they could imagine. Literally.

Today, I’ve decided to take things to a whole new level. I will run Stable Diffusion on the most powerful GPU available to the public as of September 2022: the Nvidia A100 with 80 GB of HBM2e memory, a behemoth of a GPU based on the Ampere architecture and TSMC's 7 nm manufacturing process. Yup, that’s the same Ampere architecture powering the RTX 3000 series, except that the A100 is a datacenter-grade GPU, or, as Nvidia itself calls it, an “enterprise-ready Tensor Core GPU.”

Unfortunately, I don’t have an A100 at home, but Google has plenty for us to experiment with. Using this type of GPU, however, requires contacting Google Cloud’s customer support and submitting a quota increase request.

In my request, I explained that I wanted to run Stable Diffusion and therefore needed one A100 80GB in the us-central1 region (at the time of writing, the A100 80GB is in public preview).

I already sent that request, Google approved it, and I am now ready to create a Linux VM with the most powerful GPU attached.
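If you'd rather confirm the approved quota from the command line than from the console, a sketch like the one below can read it. It assumes the JSON output of `gcloud compute regions describe us-central1 --format=json`, which lists `quotas` entries with `metric`, `limit`, and `usage` fields. The metric name `NVIDIA_A100_80GB_GPUS` is my assumption, so check it against the names your project actually shows, and the sample JSON is made up for illustration.

```python
import json

# Assumed quota metric name for the A100 80GB; verify it in your own project.
A100_80GB_METRIC = "NVIDIA_A100_80GB_GPUS"


def gpu_headroom(region_json: str, metric: str = A100_80GB_METRIC) -> float:
    """Return limit minus usage for one quota metric, or 0.0 if it isn't listed."""
    region = json.loads(region_json)
    for quota in region.get("quotas", []):
        if quota.get("metric") == metric:
            return quota.get("limit", 0.0) - quota.get("usage", 0.0)
    return 0.0


# Hypothetical, trimmed output of:
#   gcloud compute regions describe us-central1 --format=json
sample = (
    '{"name": "us-central1", "quotas": '
    '[{"metric": "NVIDIA_A100_80GB_GPUS", "limit": 1.0, "usage": 0.0}]}'
)
print(gpu_headroom(sample))  # 1.0
```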

So, in Google Compute Engine, I clicked “Create a new VM”, went to the GPU section, and selected the Nvidia A100 80GB. This automatically configures an a2-ultragpu-1g machine type, which packs 12 vCPUs and 170 GB of RAM.

As for the boot disk, I will select one of the images under “Deep Learning on Linux”.
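The same VM can also be described as a `gcloud compute instances create` call. The sketch below only builds the command and does not run it. The zone `us-central1-a`, the `pytorch-latest-gpu` image family, the 200GB disk size, and the VM name are assumptions for illustration, not the exact choices I made in the console.

```python
import shlex


def create_vm_command(name: str, zone: str = "us-central1-a",
                      image_family: str = "pytorch-latest-gpu") -> list:
    """Build a gcloud command for an a2-ultragpu-1g VM (one A100 80GB).

    Zone, image family, and disk size are assumptions: check which zones
    offer this machine type and which Deep Learning VM families exist today.
    """
    return [
        "gcloud", "compute", "instances", "create", name,
        f"--zone={zone}",
        # A2 machine types come with their GPU attached, so no --accelerator flag.
        "--machine-type=a2-ultragpu-1g",
        # VMs with GPUs can't live-migrate, so host maintenance must stop them.
        "--maintenance-policy=TERMINATE",
        # Deep Learning VM images are published in this project.
        f"--image-family={image_family}",
        "--image-project=deeplearning-platform-release",
        "--boot-disk-size=200GB",
        # Asks the Deep Learning VM image to install the NVIDIA driver on first boot.
        "--metadata=install-nvidia-driver=True",
    ]


print(shlex.join(create_vm_command("sd-a100")))
```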

Google Cloud will warn you if the selected boot drive image does not include CUDA drivers preinstalled. If you try to run Stable Diffusion UI in a VM without CUDA, it will fall back to CPU, making the image generation process atrociously slow.
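To find out which path you'll get before launching the UI, a quick check like this one works. It assumes a PyTorch install (the original Stable Diffusion code is built on PyTorch). The function name is hypothetical, and `torch.cuda.is_available()` is the standard PyTorch call.

```python
def pick_device() -> str:
    """Return "cuda" when PyTorch can see a GPU, otherwise "cpu"."""
    try:
        import torch  # treated as optional so the check runs anywhere
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


device = pick_device()
if device == "cpu":
    print("No CUDA device visible: generation would fall back to the slow CPU path.")
else:
    print("CUDA device found.")
```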

With everything set, I will SSH into the monstrous VM and finish the installation of Nvidia’s drivers.
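Once the drivers are in, `nvidia-smi` is the quickest way to confirm that the GPU is visible and, later, to watch it work. The sketch below queries a few fields in CSV form and parses them. The helper names are hypothetical, and the sample line reuses the 72125MiB / 81920MiB figure from my final test, which works out to roughly 88% of the VRAM.

```python
import shutil
import subprocess

# Fields requested from nvidia-smi; "nounits" drops the "MiB" and "%" suffixes.
FIELDS = ["name", "utilization.gpu", "memory.used", "memory.total"]


def parse_gpu_line(line: str) -> dict:
    """Parse one line of `nvidia-smi --format=csv,noheader,nounits` output."""
    name, util, used, total = [part.strip() for part in line.split(",")]
    used_mib, total_mib = int(used), int(total)
    return {
        "name": name,
        "gpu_util_pct": int(util),
        "mem_used_mib": used_mib,
        "mem_total_mib": total_mib,
        "mem_pct": 100.0 * used_mib / total_mib,
    }


def query_gpus() -> list:
    """Return one dict per GPU, or an empty list if nvidia-smi isn't installed."""
    if shutil.which("nvidia-smi") is None:
        return []  # drivers not installed yet, or not a GPU machine
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={','.join(FIELDS)}",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [parse_gpu_line(line) for line in out.splitlines() if line.strip()]


if __name__ == "__main__":
    # Illustrative line built from the numbers quoted at the top of this post.
    sample = parse_gpu_line("A100 80GB, 100, 72125, 81920")
    print(f"{sample['mem_pct']:.1f}% of VRAM in use")  # 88.0% of VRAM in use
```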

At this point, I could have partitioned the A100 into multiple smaller GPU instances with Nvidia's Multi-Instance GPU (MIG) feature, but that will be a test for another day.

I chose the Stable Diffusion UI repo because it comes with a bash script that automatically downloads and installs all the necessary dependencies. It also starts a web server ready to accept prompts and spit out images.

Thanks to all the contributors of Stable Diffusion UI.

Without further ado, I will download the compressed package, unzip it, and run the startup script as instructed in the repo's README file. After a few minutes, the UI will start on port 9000.

To see the UI, grab your VM's ephemeral external IP address from Google Compute Engine, paste it into your browser's address bar, and append :9000 (for example, http://<external-ip>:9000).

The first time you send a prompt, the server will download some additional files, so wait a couple more minutes before retrying.
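If you'd rather not keep refreshing the browser while the startup script brings the server up, a small polling helper can do it for you. This sketch uses only Python's standard library. The IP address is a documentation placeholder, and the retry counts are arbitrary assumptions. It only checks that the web server answers, not that the first-prompt downloads have finished.

```python
import http.client
import time
import urllib.error
import urllib.request

UI_PORT = 9000  # the port the Stable Diffusion UI listens on in this post


def ui_url(external_ip: str, port: int = UI_PORT) -> str:
    """Build the address to paste into the browser's address bar."""
    return f"http://{external_ip}:{port}"


def wait_for_ui(url: str, attempts: int = 10, delay_s: float = 30.0,
                timeout_s: float = 5.0) -> bool:
    """Poll `url` until the web server answers, or give up after `attempts`."""
    for attempt in range(attempts):
        try:
            with urllib.request.urlopen(url, timeout=timeout_s):
                return True
        except urllib.error.HTTPError:
            return True  # the server answered, just with an error status
        except (OSError, http.client.HTTPException):
            pass  # refused, timed out, or not speaking HTTP yet
        if attempt < attempts - 1:
            time.sleep(delay_s)
    return False


if __name__ == "__main__":
    url = ui_url("203.0.113.10")  # placeholder: use your VM's external IP
    print(url)  # http://203.0.113.10:9000
```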

And that’s pretty much it in terms of configuration to make Stable Diffusion run on the Nvidia A100.

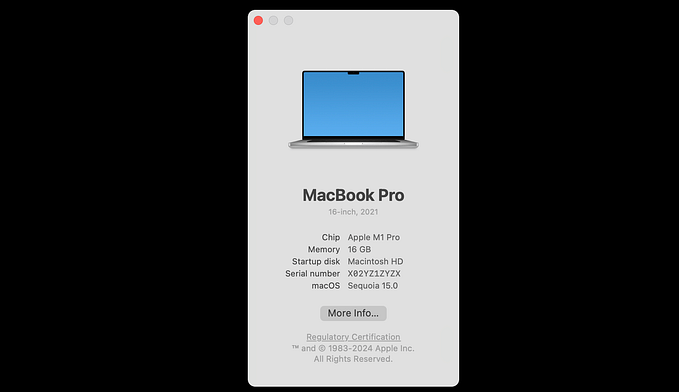

Even though I never managed to use all the available VRAM, one thing was certain: the A100 runs faster than a VM with a T4 GPU and much, MUCH faster than my little Apple M1 MacBook Air.

If you want to replicate this experiment, there are three things that you have to keep in mind:

- You might have to create a firewall rule in your Google Cloud project to allow access to the Stable Diffusion UI on port 9000.

- This deployment will cost you 1.15 dollars per hour, or 836.72 dollars per month after the sustained use discount.

- The 300-dollar free trial doesn’t cover VMs with GPUs attached.
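Here is a rough sketch for the first two points. One helper builds a `gcloud compute firewall-rules create` command that opens port 9000. The rule name, network, and source range are placeholders I picked. The other helper multiplies the hourly rate quoted above by the number of hours you plan to keep the VM running. Prices change, so treat 1.15 as the figure from this post, not a current quote.

```python
def firewall_rule_command(source_cidr: str, rule_name: str = "allow-sd-ui",
                          network: str = "default", port: int = 9000) -> list:
    """Build a gcloud command that opens the UI port to one source range.

    Without --target-tags, the rule applies to every VM on the network.
    """
    return [
        "gcloud", "compute", "firewall-rules", "create", rule_name,
        f"--network={network}",
        "--direction=INGRESS",
        f"--allow=tcp:{port}",
        # Restrict to your own IP (e.g. "198.51.100.7/32") rather than 0.0.0.0/0.
        f"--source-ranges={source_cidr}",
    ]


def session_cost(hours: float, hourly_rate: float = 1.15) -> float:
    """Rough cost in dollars of keeping the VM running for `hours`."""
    return round(hours * hourly_rate, 2)


print(" ".join(firewall_rule_command("198.51.100.7/32")))
print(session_cost(8))  # 9.2
```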

Now, a brief comparison of generation times (DNF = did not finish):

╔════════════════════════╦═════════╦═══════════╦═══════════╗
║ Device                 ║ 512x512 ║ 1024x1024 ║ 1024x2048 ║
╠════════════════════════╬═════════╬═══════════╬═══════════╣
║ MacBook Air M1 16GB    ║ 138s    ║ DNF       ║ DNF       ║
║ Nvidia T4 16GB GDDR6   ║ 36.96s  ║ 281.01s   ║ DNF       ║
║ Nvidia A100 80GB HBM2e ║ 14.05s  ║ 27.03s    ║ 92.78s    ║
╚════════════════════════╩═════════╩═══════════╩═══════════╝

When generating a 2048x2048 image, the nvidia-smi command gives a deeper look at what's happening inside the A100.
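To put the table in perspective, the ratios below divide each device's time by the A100's time at the same resolution. This is a small sketch over the numbers above. It assumes the MacBook's 138 is also in seconds and skips any cell marked DNF.

```python
# Generation times from the comparison table, in seconds (None = DNF).
# Assumption: the MacBook's 138 is also in seconds.
TIMES = {
    "MacBook Air M1": {"512x512": 138.0, "1024x1024": None, "1024x2048": None},
    "Nvidia T4": {"512x512": 36.96, "1024x1024": 281.01, "1024x2048": None},
    "Nvidia A100": {"512x512": 14.05, "1024x1024": 27.03, "1024x2048": 92.78},
}


def speedup(slow: str, fast: str, size: str):
    """How many times faster `fast` is than `slow` at `size`, or None on a DNF."""
    a, b = TIMES[slow][size], TIMES[fast][size]
    if a is None or b is None:
        return None
    return a / b


for device in ("MacBook Air M1", "Nvidia T4"):
    for size in ("512x512", "1024x1024"):
        s = speedup(device, "Nvidia A100", size)
        label = "n/a (DNF)" if s is None else f"{s:.1f}x"
        print(f"A100 vs {device} at {size}: {label}")
```

Run as-is, the sketch reports the A100 at roughly 9.8x the MacBook Air and 2.6x the T4 at 512x512, and about 10.4x the T4 at 1024x1024.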

Not even when asked to generate twenty 2048x2048 images at once did the A100 give up…

prompt: young female battle robot, award winning, portrait bust, symmetry, faded lsd colors, galaxy background, tim hildebrandt, wayne barlowe, bruce pennington, donato giancola, larry elmore, masterpiece, trending on artstation, cinematic composition, beautiful lighting, hyper detailed, Melancholic, Horrifying, 3D Sculpt, Blender Model, Global Illumination, Glass Caustics, 3D Render

seed: 1259654

num_inference_steps: 50

guidance_scale: 7.5

w: 2048

h: 2048

precision: autocast

save_to_disk_path: None

turbo: True

use_cpu: False

use_full_precision: True

use_face_correction: GFPGANv1.3

use_upscale: RealESRGAN_x4plus

show_only_filtered_image: True

device cuda

Using precision: full

Global seed set to 1259654

Sampling: 0%| | 0/1 [00:00<?, ?it/s

seeds used = [1259654, 1259655, 1259656, 1259657, 1259658, 1259659, 1259660, 1259661, 1259662, 1259663, 1259664, 1259665, 1259666, 1259667, 1259668, 1259669, 1259670, 1259671, 1259672, 1259673]

Data shape for PLMS sampling is [20, 4, 256, 256]

Running PLMS Sampling with 50 timesteps

PLMS Sampler: 6%|███▌ | 3/50 [10:10<2:26:11,186.63s/it]
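The log lines above can be checked with a bit of arithmetic. Stable Diffusion samples in a latent space with 4 channels, downsampled by a factor of 8 on each side, so twenty 2048x2048 images become a [20, 4, 256, 256] tensor. Each image in the batch gets the base seed plus its index. The progress bar's time estimate is the remaining steps multiplied by the seconds per step. The helper names below are hypothetical, and the constants come from the logged shape.

```python
LATENT_CHANNELS = 4  # channels of the latent tensor, per the logged shape
DOWNSAMPLE = 8       # image pixels per latent cell along each side


def latent_shape(batch: int, height: int, width: int) -> tuple:
    """Shape of the latent tensor the sampler denoises for a batch of images."""
    return (batch, LATENT_CHANNELS, height // DOWNSAMPLE, width // DOWNSAMPLE)


def batch_seeds(seed: int, batch: int) -> list:
    """One seed per image, counting up from the base seed, as in the log."""
    return [seed + i for i in range(batch)]


def remaining_seconds(done: int, total: int, sec_per_it: float) -> float:
    """Progress-bar style estimate of the time left."""
    return (total - done) * sec_per_it


def hms(seconds: float) -> str:
    """Format seconds as H:MM:SS."""
    s = int(seconds)
    return f"{s // 3600}:{s % 3600 // 60:02d}:{s % 60:02d}"


print(latent_shape(20, 2048, 2048))           # (20, 4, 256, 256)
print(batch_seeds(1259654, 20)[-1])           # 1259673
print(hms(remaining_seconds(3, 50, 186.63)))  # 2:26:11
```

With 47 steps left at about 187 seconds each, that batch still needed roughly two and a half hours.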

This time it was me who DNF’ed out at 2:30 am after a long night of nerding around in GCP.

Hope you found this journey interesting.